Stating the problem

In my job, I do a lot of testing. And no matter how organized we try to be, we always end up with fewer machines than we need to run all the tests we want.

For some tasks, we can run MySQL Sandbox and get the job done. But sometimes we need to make sure that applications and systems work well across the network, and for that we need to install and run them on separate servers.

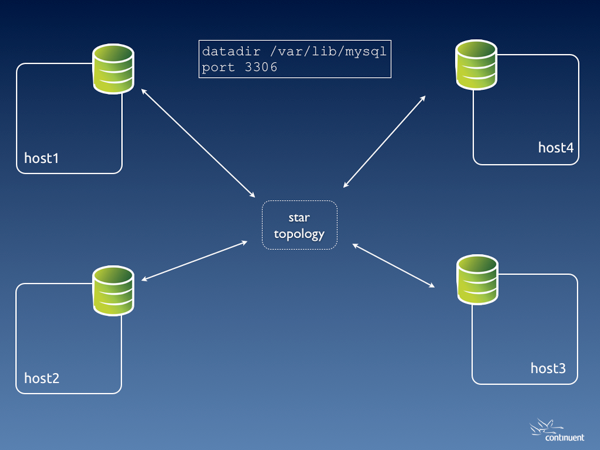

However, when you test replication systems, where every cluster takes three or four servers, you run out of available hosts very quickly. So you decide to use the clusters dedicated to automated testing for your own manual tests as well. Soon you realize that the tests you run manually clash with the automated ones, or with the ones your colleagues are running.

A simple solution is to install additional MySQL server sandboxes on each host, and then run our operations against the new servers. If we need more than one database server per host, MySQL Sandbox lets us deploy as many as we want.

There are a few obstacles to overcome for this approach to be useful:

- Installing sandboxes on remote servers is time-consuming and not automated. When we need to run automated tests, this is a serious issue.

- By default, MySQL Sandbox works on the local host only. It can, however, be installed to accept remote clients by using an appropriate option.

- Sandboxes for testing replication need to be configured on the fly. This can be done manually, but it can also be solved with advanced installation options.

- Installing via SSH can be challenging: we often need to provide several options on the command line, there are only two kinds of quotes available, and they can get mixed up when we pass options across servers.

Default deployment - with one MySQL server installed through rpm or deb

Deployment with one additional sandbox per host

Deployment with two additional sandboxes per host

Deployment with three additional sandboxes per host

I have faced this problem several times, and each time I wrote a quick shell script to deploy the sandboxes on my servers. My O.S. user has SSH access to all nodes in the cluster, which makes remote installation easier.

Remote deployment scripts

No manual installation is required. Drawing on my experience with many different deployments in my clusters, I came up with a solid model that lets me deploy remote sandboxes automatically. Initially, I had several sandboxes.sh scripts scattered around my version control system. Then I implemented a general-purpose script inside the Tungsten Toolbox. Finally, I made an even more general script for the latest release of MySQL-Sandbox (3.0.31).

Remote database connection

This problem is easy to solve. By default, sandbox users are created as

msandbox@'127.%'

There is an option that installs the sandbox users with access from anywhere in the network (--remote_access='%'). We just need to pass this option correctly to the deployer (see below).

Sandbox configuration

MySQL Sandboxes can be configured exactly as you need them. During installation, you can pass options that change the configuration file according to your needs. The syntax is:

make_sandbox tarball_name.tar.gz -- -c option=value -c another_option=value

The options passed with '-c' will be written to the configuration file and used from the very first start.

Passing complex options across servers

This is probably the most annoying problem. If you run

ssh myhost "some_command --option='value' "

and there are quotes inside 'value', you may get an error, and a difficult one to debug.
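To see why quoting breaks, it helps to know that ssh joins its command arguments into a single string, which the remote login shell then parses a second time. The minimal sketch below reproduces that second parse locally with `bash -c`, so no remote host is needed; the helper name `remote_shell` and the sample value are illustrative assumptions. It also shows one common mitigation, bash's `printf %q`.

```bash
#!/usr/bin/env bash
# ssh joins its command arguments into one string, and the remote login shell
# parses that string a second time. "bash -c" reproduces that second parse
# locally, so no remote host is needed for this demonstration.
remote_shell() { bash -c "$1"; }

# A value containing a single quote (illustrative).
value="it's here"

# Naive quoting, in the style of: ssh myhost "some_command --option='value' "
naive="printf '%s\n' --option='$value'"
if ! remote_shell "$naive" 2>/dev/null; then
    echo "naive quoting: the remote shell rejected the command"
fi

# printf %q escapes the argument so that the second parse yields it unchanged.
safe="printf '%s\n' $(printf '%q' "--option=$value")"
remote_shell "$safe"    # prints: --option=it's here
```

This assumes the remote login shell is bash: for unusual characters `%q` can emit bash-specific `$'...'` quoting, so a different remote shell might still misread the string.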

The solution to this problem is to split the process into three phases:

- Create a deployment shell script, generated dynamically inside the remote_sandboxes script. This script will have all the needed quotes in the right places, so no further quoting is needed.

- Send the script to the remote host. Note that we generate a different script for every host.

- SSH to the remote host and execute the deployment script there. The script will run all the commands you have prepared, without the risk of contaminating the command line with unwanted quotes.
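The three phases above can be sketched in bash as follows. This is a hypothetical illustration, not the code shipped with MySQL Sandbox: the function names, the `DRY_RUN` switch, and the choice to copy the tarball together with the script into the remote home directory are all assumptions. It also assumes that `--remote_access` goes after the `--` separator, together with the `-c option=value` settings.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the three phases: generate, send, execute.
# Not the script shipped with MySQL Sandbox.

# Phase 1: write one deployment script per host. Every value is quoted once,
# here, with printf %q, so nothing needs re-quoting on the ssh command line.
generate_deploy_script() {
    local host=$1 tarball=$2 outdir=$3; shift 3
    local script="$outdir/deploy_${host}.sh" setting
    {
        echo '#!/usr/bin/env bash'
        echo "# deployment script generated for $host"
        echo 'set -e'
        printf 'TARBALL=%q\n' "$tarball"
        # '%%' in a printf format prints a literal '%'.
        printf "make_sandbox \"\$TARBALL\" -- --remote_access='%%'"
        for setting in "$@"; do
            printf ' -c %q' "$setting"   # one "-c option=value" per setting
        done
        echo
    } > "$script"
    echo "$script"
}

# With DRY_RUN=1, print commands instead of running them.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Phases 2 and 3: copy the script to the host, then execute it there.
# Assumption of this sketch: the tarball is copied alongside the script
# into the remote user's home directory.
deploy_to_host() {
    local host=$1 tarball=$2 workdir=$3; shift 3
    local script
    script=$(generate_deploy_script "$host" "$(basename "$tarball")" "$workdir" "$@")
    run scp "$script" "$tarball" "$host:"
    run ssh "$host" "bash $(basename "$script")"
}

# Example (prints the scp/ssh commands without contacting any host):
# DRY_RUN=1 deploy_to_host host1 ~/downloads/mysql-5.6.10.tar.gz /tmp server-id=10
```

Because the script is generated locally, each value is quoted exactly once, by `printf %q`, and the ssh command line only ever carries a plain file name.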

An additional advantage of this approach is that you can include checks inside the dynamically generated script, something that could not easily be done with a single SSH call.
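As an example of checks that fit naturally in a generated script, the sketch below emits a pre-flight section that stops the deployment if `make_sandbox` is missing, or if the chosen port already answers on the loopback interface. The function name `emit_checks` is hypothetical, and the port test relies on bash's `/dev/tcp` redirection, so it assumes the generated script runs under bash.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: pre-flight checks that a generator could write at the
# top of each per-host deployment script. They run on the remote host itself.
emit_checks() {
    local port=$1
    printf 'PORT=%q\n' "$port"
    cat <<'EOF'
# 1. MySQL Sandbox must be installed on this host.
if ! command -v make_sandbox >/dev/null 2>&1; then
    echo "$HOSTNAME: make_sandbox not found" >&2
    exit 1
fi
# 2. Nothing may already be listening on the chosen port.
#    Connecting through bash's /dev/tcp succeeds only if the port is in use.
if (exec 3<>"/dev/tcp/127.0.0.1/$PORT") 2>/dev/null; then
    echo "$HOSTNAME: port $PORT is already in use" >&2
    exit 1
fi
EOF
}

# Example: print the checks for port 19000.
emit_checks 19000
```

The loopback probe only detects servers listening on 127.0.0.1; a process bound solely to another interface would go unnoticed.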

Try it out

The new script is installed alongside the rest of the MySQL Sandbox executables, and it is called deploy_to_remote_sandboxes.sh. For example:

deploy_to_remote_sandboxes.sh -l host1,host2,host3 -P 19000 -d myGA -t ~/downloads/mysql-5.6.10-linux-blah-blah.tar.gz

This command will install a sandbox called 'myGA' with MySQL 5.6.10 on host1, host2, and host3, using port 19000 and the given tarball.
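The example command combines four options. A wrapper around similar tooling could parse them with bash `getopts`, as in this minimal sketch. The meaning of each flag is inferred from the example and its description; the function and variable names are hypothetical, and this is not the option parser of the script shipped with MySQL Sandbox.

```bash
#!/usr/bin/env bash
# Hypothetical re-creation of the options used in the example command
# (-l hosts, -P port, -d sandbox name, -t tarball). It is NOT the parser of
# the script shipped with MySQL Sandbox; it only shows how to parse them.
parse_args() {
    HOSTS=()
    PORT=''
    SANDBOX_NAME=''
    TARBALL=''
    local opt OPTIND=1
    while getopts 'l:P:d:t:' opt; do
        case $opt in
            l) IFS=',' read -r -a HOSTS <<< "$OPTARG" ;;   # split the comma list
            P) PORT=$OPTARG ;;
            d) SANDBOX_NAME=$OPTARG ;;
            t) TARBALL=$OPTARG ;;
            *) echo "usage: parse_args -l host1,host2 -P port -d name -t tarball" >&2
               return 1 ;;
        esac
    done
    if [ "${#HOSTS[@]}" -eq 0 ] || [ -z "$PORT" ] || [ -z "$SANDBOX_NAME" ] || [ -z "$TARBALL" ]; then
        echo "all of -l, -P, -d and -t are required" >&2
        return 1
    fi
}

parse_args -l host1,host2,host3 -P 19000 -d myGA -t mysql-5.6.10.tar.gz
for host in "${HOSTS[@]}"; do
    echo "$host: sandbox $SANDBOX_NAME, port $PORT, tarball $TARBALL"
done
```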

Assumptions:

- You must have SSH access from your current O.S. user to a corresponding user on the remote hosts. Access through an SSH key is recommended.

- MySQL Sandbox should be installed on all remote hosts.

- The port you choose for the sandboxed database server must be open (and not already in use) on all hosts.

- If you have an existing sandbox with the same name, it will be overwritten.
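The first two assumptions can be verified before deploying. This hypothetical pre-flight function runs `command -v make_sandbox` on each host through ssh, using the standard OpenSSH client options `BatchMode=yes` (fail instead of prompting for a password, which reveals hosts without key-based access) and `ConnectTimeout`. The names `check_hosts` and `remote` are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight for the assumptions above: key-based SSH access
# and make_sandbox available on every remote host.

# Thin wrapper around ssh. BatchMode=yes makes ssh fail instead of asking
# for a password; ConnectTimeout bounds the wait for unreachable hosts.
remote() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" "$2"
}

# Report every host, and return non-zero if any of them fails the check.
check_hosts() {
    local host failed=0
    for host in "$@"; do
        if remote "$host" 'command -v make_sandbox' >/dev/null 2>&1; then
            echo "$host: ok"
        else
            echo "$host: no key-based SSH access, or make_sandbox not in PATH"
            failed=1
        fi
    done
    return "$failed"
}

# Usage: check_hosts host1 host2 host3 || exit 1
```

Keeping ssh inside the small `remote` wrapper makes the loop easy to test without real hosts. Note that a non-interactive ssh session may use a shorter PATH than a login shell, so a host can fail this check even when MySQL Sandbox is installed there.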

2 comments:

The example command looks wrong

deploy_to_remote_sanboxes.sh -r host1,host2,host3 -P 19000 -d myGA -t ~/downloads/mysql-5.6.10-linux-blah-blah.tar.gz

Should it not be

deploy_to_remote_sandboxes.sh -l host1,host2,host3 -P 19000 -d myGA -t ~/downloads/mysql-5.6.10-linux-blah-blah.tar.gz

Neil,

Thanks. Fixed!
